Mobile Support for Rescue Forces, Integrating Multiple Modes of Interaction

1. Project Rationale

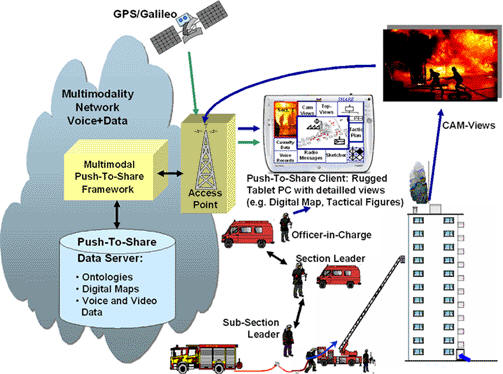

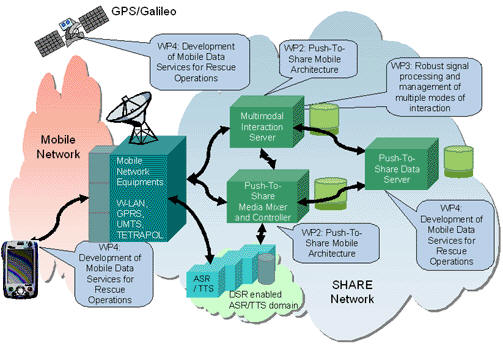

The SHARE project will develop a Push-To-Share advanced mobile service that will provide critical multimodal communication support for emergency teams during extensive rescue operations and disaster management. At present, emergency forces use half-duplex walkie-talkie technology and are restricted to simple push-to-talk voice communication. All status information, reporting and documentation for decision making is processed manually. Once the new service is introduced, rescue operations will benefit from sophisticated multimodal interaction and online, on-site access to data services that provide up-to-date operation status information, as well as details about the emergency, such as location and environment.

Specific innovations that will be developed by the SHARE project include:

- Mobile system architecture design that enables bi-directional, multimodal communication using Push-To-Share technology

- Robust speech and image processing under extreme conditions

- Interactive digital maps with linked multimedia status information

- Structuring of required information using situation-dependent ontologies and multimedia data indexing capabilities

- Improvements to, and new applications of, TETRA, the upcoming digital trunked radio system for police and rescue forces

The SHARE project will address the mobile multimodal communication requirements of rescue forces, including their need for on-line access to data necessary for decision making. The Push-To-Share service developed by SHARE will combine advanced, innovative technologies to allow mobile workers to communicate naturally and bi-directionally, and to SHARE structured multimodal information resources, including audio, video, text, graphic and location information. The Push-To-Share service will be based on a new mobile Push-To-Share architecture and will incorporate a robust, intuitive multimodal user interface, an interactive digital map, location-based services and intelligent information processing and indexing. Emergency forces, especially units in charge of rescue management, will derive significant advantage from the communication and multimodal information support offered by this new mobile service.

The project concept will be integrated into a mobile communication infrastructure based on 2.5G, 3G (UMTS) and mobile WLAN networks. The services provided by SHARE will offer a full end-to-end solution that gives rescue teams a mobile work environment that is intelligent, robust and easy to use.

2. Our Research Objectives

The main objective of this task is to provide tools for 2D image and video analysis that can assist a fire brigade or another rescue team during and after a rescue operation. A rescue operation produces a great deal of visual information that can be analyzed automatically to assist the fire fighters and the section/subsection leaders of the operation. The main idea is to acquire 2D images or videos at the scene of the fire and transmit them to the main server for analysis. The images will be acquired using portable thermal cameras (e.g., mounted on the fire fighters’ helmets). Video analysis takes place on the main server and produces a description of each analyzed image. The desired results are the detection of human presence in the image, the detection of fire foci, the localization of humans and fire foci, and the characterization of the fire in terms of size, spreading, etc. Fire fighters cannot obtain this kind of information without a thermal camera, especially in a fire/smoke environment where visibility is limited and critical decisions must be taken during the operation. This information will help the section/subsection leaders assess the situation and provide assistance and information to the fire fighters.

To achieve this functionality, the first step is to integrate state-of-the-art 2D image analysis techniques suitable for images and videos acquired with a thermal camera in a fire/smoke environment. Techniques for human presence detection, object detection and characterization will be considered in order to provide a first prototype of the visual analysis module. Research will also be conducted on automatic visual analysis techniques for human presence detection, and for locating and characterizing the fire focus in the scene. The images/videos are captured during the operation using portable thermal cameras and are then transmitted to the main server, where the image analysis techniques are applied. Object detection and registration techniques will be developed and optimized for this purpose.
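As a rough illustration of what the server-side analysis might produce, the sketch below thresholds a thermal frame, groups hot pixels into connected regions, and reports each region's area, bounding box and centroid. It is not the project's method: the temperature bands, the `min_area` filter and all function names are assumptions made for the example, and the frame is assumed to be a grid of temperatures in degrees Celsius. A real module would need far more robust detection than fixed thresholds, for instance because pixels bordering a fire can fall into the human temperature band.

```python
from collections import deque

# Hypothetical temperature bands in degrees Celsius (illustrative only).
HUMAN_BAND = (30.0, 40.0)
FIRE_MIN = 150.0


def find_regions(frame, predicate):
    """Return 4-connected regions of pixels whose value satisfies predicate."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or not predicate(frame[r][c]):
                continue
            # Breadth-first flood fill starting from this seed pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and predicate(frame[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(pixels)
    return regions


def describe(pixels, label):
    """Summarize one region: size (pixel count), bounding box and centroid."""
    ys = [p[0] for p in pixels]
    xs = [p[1] for p in pixels]
    return {
        "label": label,
        "area": len(pixels),
        "bbox": (min(ys), min(xs), max(ys), max(xs)),  # (top, left, bottom, right)
        "centroid": (sum(ys) / len(ys), sum(xs) / len(xs)),
    }


def analyze_frame(frame, min_area=2):
    """Return descriptions of fire regions first, then human candidates."""
    fire = find_regions(frame, lambda t: t >= FIRE_MIN)
    human = find_regions(frame, lambda t: HUMAN_BAND[0] <= t <= HUMAN_BAND[1])
    results = []
    for label, regions in (("fire", fire), ("human_candidate", human)):
        for pixels in regions:
            if len(pixels) >= min_area:  # discard isolated pixels as noise
                results.append(describe(pixels, label))
    return results
```

Calling `analyze_frame` on successive frames and comparing the reported fire areas would give a crude indication of spreading, again only as an illustration of the kind of description the section leaders could receive.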

3. Project News

Project Meeting 6 – 7 June 2005 – Greece

A project meeting took place on 6 – 7 June 2005 in Thessaloniki, Greece. All partners attended the meeting, which was hosted by AUTH.

Main goals of the meeting:

– presentation and discussion of project status

– planning: implementation of first demonstrator

– preparation for the first EU audit, which will take place in November