Abstract

2D convolutions play a central role in machine learning, as they form the first layers of Convolutional Neural Networks (CNNs). They are equally important in computer vision (template matching through correlation, correlation trackers) and in image processing (image filtering/denoising/restoration). 3D convolutions are used in machine learning (video analysis through CNNs) and in video filtering/denoising/restoration.

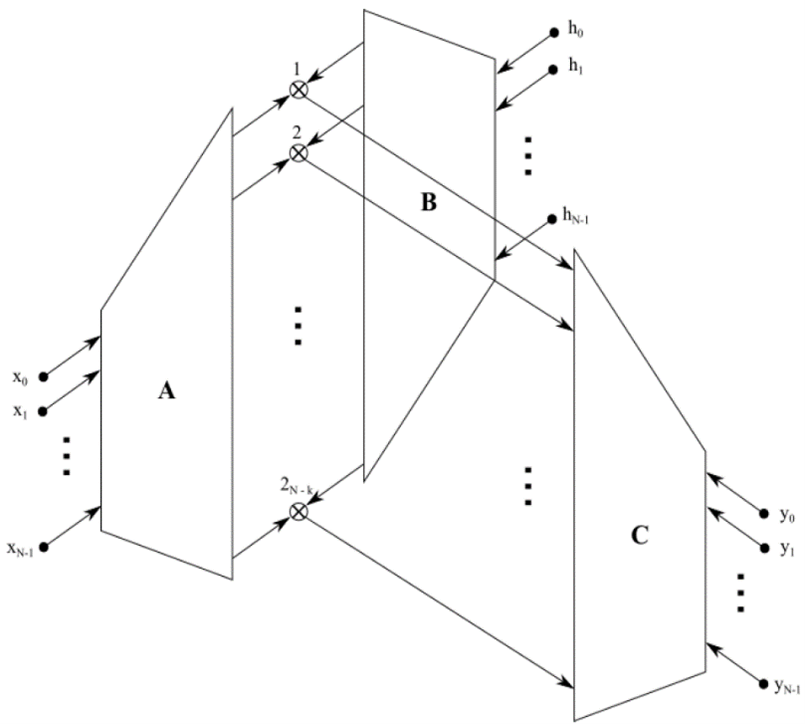

Therefore, 2D/3D convolution algorithms are essential both for machine learning and for signal/image/video processing and analysis. As the computational complexity of direct 2D and 3D convolution is of the order O(N^4) and O(N^6), respectively (for signals and kernels of size N along each dimension), their fast execution is a must.

This lecture overviews 2D linear and cyclic convolution. It then presents their fast execution through FFTs, resulting in algorithms with computational complexity of the order O(N^2 log_2 N). Optimal Winograd 2D convolution algorithms, which have a theoretically minimal number of computations, are presented next. Parallel block-based 2D convolution/calculation methods are overviewed. Finally, the use of 2D convolutions in Convolutional Neural Networks is presented.
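As a rough illustration of the first two topics, the sketch below computes a 2D linear convolution in two ways: directly, with four nested loops, and through a cyclic convolution evaluated with FFTs on zero-padded inputs. It is a minimal pure-Python sketch and not the lecture's own implementation. All function names (`fft`, `fft2`, `linear_conv2d_direct`, `linear_conv2d_fft`) are hypothetical, and the recursive radix-2 FFT assumes power-of-two lengths, which the padding step guarantees.

```python
import cmath

def fft(x, inverse=False):
    """Recursive radix-2 FFT (unscaled); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def fft2(a, inverse=False):
    """Row-column 2D FFT: transform every row, then every column."""
    rows = [fft(r, inverse) for r in a]
    cols = [fft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def linear_conv2d_direct(x, h):
    """Direct 2D linear convolution; O(N^4) for N x N inputs and kernels."""
    n1, n2, m1, m2 = len(x), len(x[0]), len(h), len(h[0])
    y = [[0.0] * (n2 + m2 - 1) for _ in range(n1 + m1 - 1)]
    for i in range(n1):
        for j in range(n2):
            for k in range(m1):
                for l in range(m2):
                    y[i + k][j + l] += x[i][j] * h[k][l]
    return y

def next_pow2(n):
    p = 1
    while p < n:
        p *= 2
    return p

def linear_conv2d_fft(x, h):
    """Linear convolution as a cyclic convolution of zero-padded arrays."""
    r = len(x) + len(h) - 1          # output rows of the linear convolution
    c = len(x[0]) + len(h[0]) - 1    # output columns
    R, C = next_pow2(r), next_pow2(c)

    def pad(a):
        return [[a[i][j] if i < len(a) and j < len(a[0]) else 0.0
                 for j in range(C)] for i in range(R)]

    X, H = fft2(pad(x)), fft2(pad(h))
    Y = [[X[i][j] * H[i][j] for j in range(C)] for i in range(R)]
    y = fft2(Y, inverse=True)
    # The inverse transform above is unscaled, so divide by R * C here.
    return [[y[i][j].real / (R * C) for j in range(c)] for i in range(r)]

x = [[1.0, 2.0], [3.0, 4.0]]
h = [[1.0, 0.0], [0.0, -1.0]]
direct = linear_conv2d_direct(x, h)
fast = linear_conv2d_fft(x, h)
assert all(abs(a - b) < 1e-9 for ra, rb in zip(direct, fast) for a, b in zip(ra, rb))
```

Zero-padding to at least the linear output size is what makes the cyclic result coincide with the linear one. Without it, the tails of the convolution would wrap around and alias into the output.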