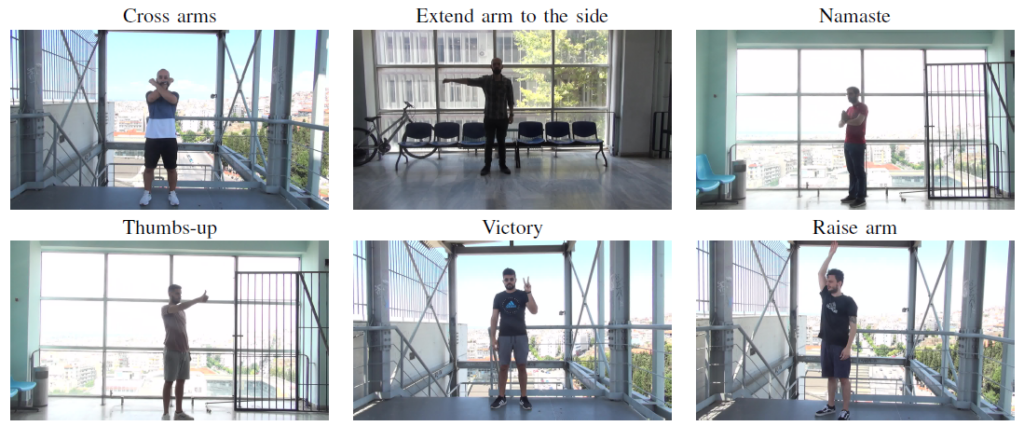

AUTH UAV Gesture Dataset

AUTH has created the “AUTH UAV Gesture Dataset” in the context of the “AERIAL-CORE” collaborative project, funded by the European Union’s Horizon 2020 research and innovation programme. The AUTH UAV Gesture Dataset contains videos depicting human subjects performing six classes of gestures. It can be used for training and evaluating gesture recognition machine learning models. It incorporates parts of the “UAV-Gesture” dataset (from the University of South Australia) and of the “NTU RGB+D” dataset (from the ROSE Lab). AUTH has made the AUTH UAV Gesture Dataset available to researchers only, in order to advance relevant academic research and to assist the exchange of information that promotes science. Anyone who uses any part of these datasets in their work is kindly asked to cite the following three papers:

- F. Patrona, I. Mademlis, I. Pitas, “An Overview of Hand Gesture Languages for Autonomous UAV Handling”, in Proceedings of the Workshop on Aerial Robotic Systems Physically Interacting with the Environment (AIRPHARO), 2021 (DOI: 10.1109/AIRPHARO52252.2021.9571027).

- A. Shahroudy, J. Liu, T.-T. Ng, G. Wang, “NTU RGB+D: A large-scale dataset for 3D human activity analysis”, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016 (DOI: 10.1109/CVPR.2016.115).

- A. G. Perera, Y. W. Law, J. Chahl, “UAV-GESTURE: A dataset for UAV control and gesture recognition”, in Proceedings of the European Conference on Computer Vision (ECCV), 2018 (DOI: 10.1007/978-3-030-11012-3_9).

To access the datasets created/assembled by Aristotle University of Thessaloniki, please complete and sign this license agreement. Then email it to Prof. Ioannis Pitas (using “AerialCore – AUTH UAV Gesture Dataset availability” as the email subject) to receive FTP credentials for downloading.